GUI-ReWalk: Massive Data Generation for GUI Agent via Stochastic Exploration and Intent-Aware Reasoning

Abstract

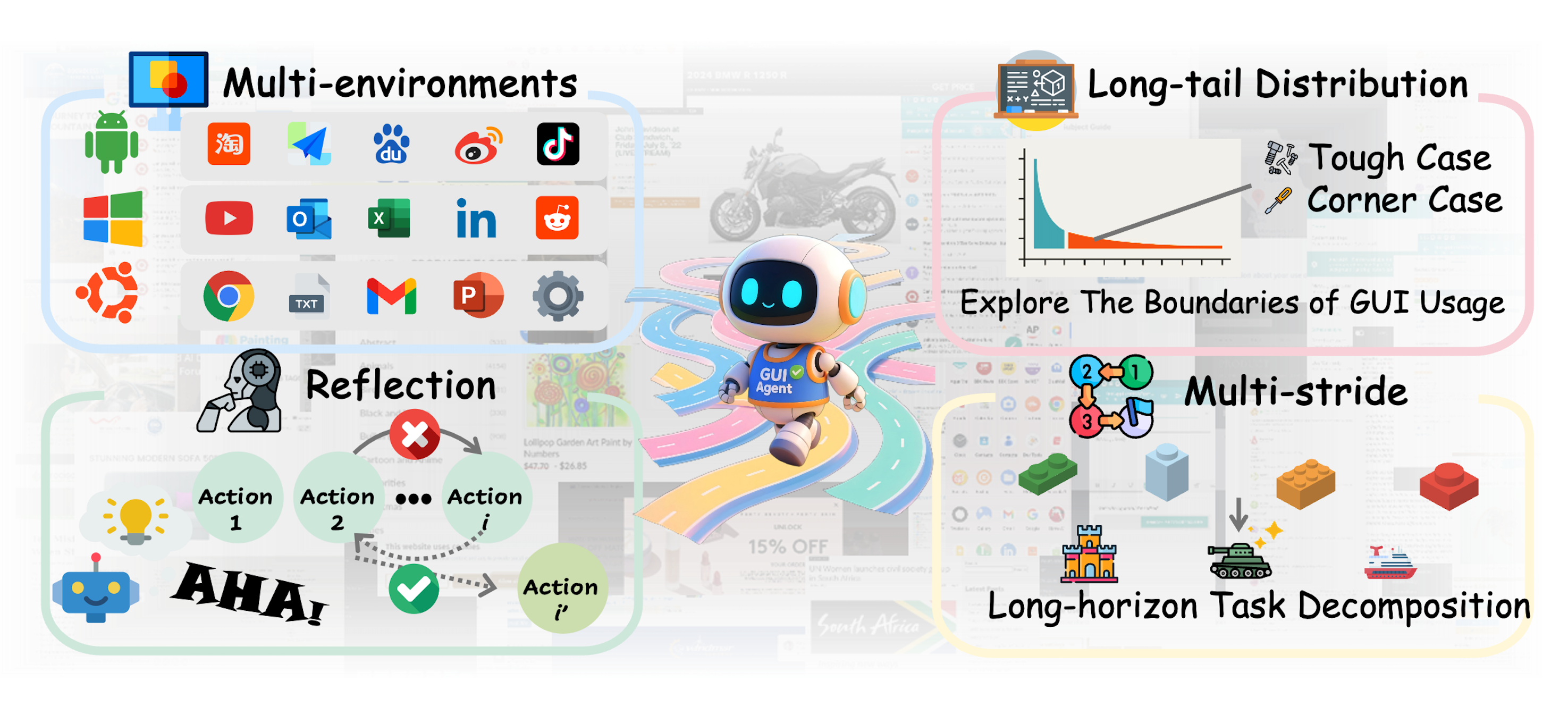

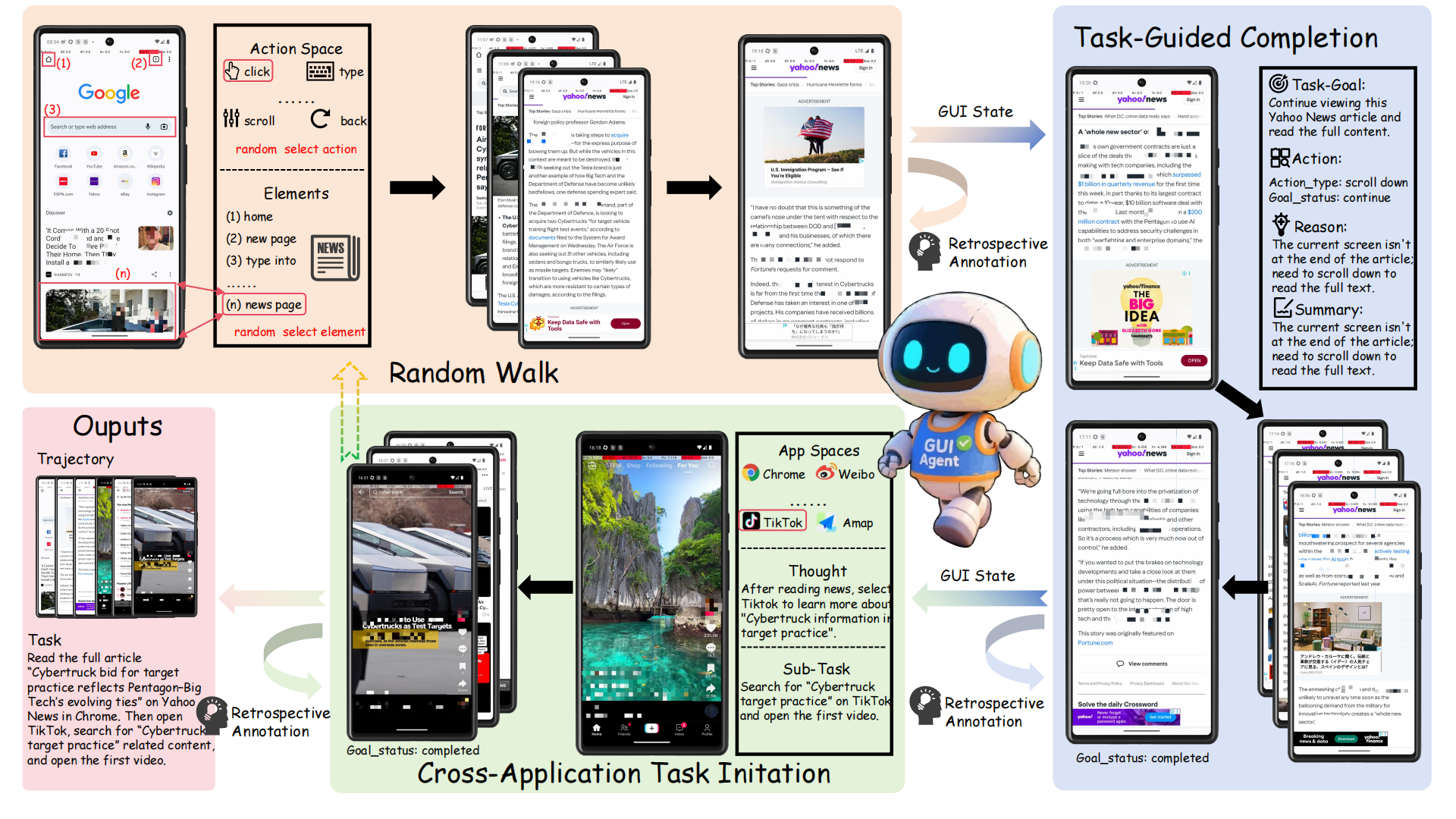

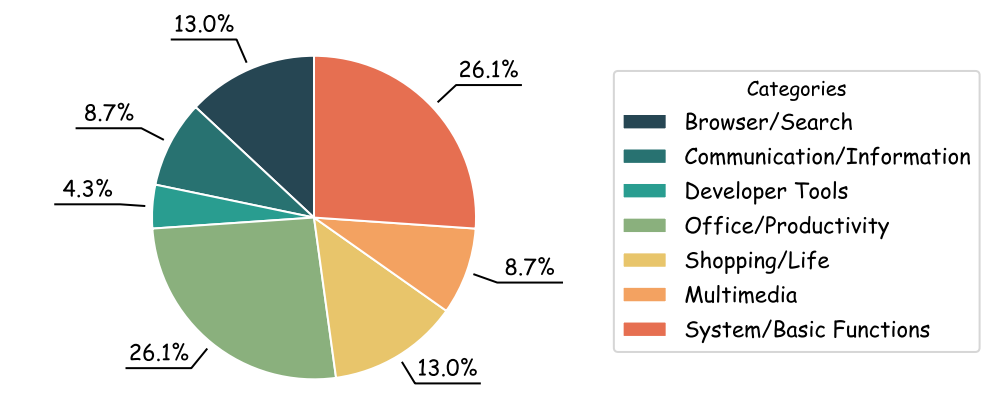

Graphical User Interface (GUI) agents, powered by large language and vision-language models, hold promise for enabling end-to-end automation in digital environments. However, their progress is fundamentally constrained by the scarcity of scalable, high-quality trajectory data. Existing data collection strategies rely either on costly and inconsistent manual annotation or on synthetic generation methods that trade diversity against meaningful task coverage. To bridge this gap, we present GUI-ReWalk: a reasoning-enhanced, multi-stage framework for synthesizing realistic and diverse GUI trajectories. GUI-ReWalk begins with a stochastic exploration phase that emulates human trial-and-error behavior, and progressively transitions into a reasoning-guided phase where inferred goals drive coherent and purposeful interactions. Moreover, it supports multi-stride task generation, enabling the construction of long-horizon workflows across multiple applications. By combining randomness for diversity with goal-aware reasoning for structure, GUI-ReWalk produces data that better reflects the intent-aware, adaptive nature of human-computer interaction. We further train Qwen2.5-VL-7B on the GUI-ReWalk dataset and evaluate it across multiple benchmarks, including ScreenSpot-Pro, OSWorld-G, UI-Vision, AndroidControl, and GUI-Odyssey. Results demonstrate that GUI-ReWalk enables superior coverage of diverse interaction flows, higher trajectory entropy, and more realistic user intent. These findings establish GUI-ReWalk as a scalable and data-efficient framework for advancing GUI agent research and enabling robust real-world automation.
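
The abstract reports higher trajectory entropy without defining the measure on this page. One plausible reading, offered purely as an assumption, is the Shannon entropy of the action-type distribution across all steps in a dataset. The sketch below illustrates that reading; the `action` field name and the toy trajectories are hypothetical and are not taken from the GUI-ReWalk data format.

```python
import math
from collections import Counter


def action_entropy(trajectories):
    """Shannon entropy (in bits) of action types over every step.

    Assumption: each step is a dict with an "action" key. This is an
    illustrative schema, not the GUI-ReWalk one.
    """
    counts = Counter(step["action"] for traj in trajectories for step in traj)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # Sum of -p * log2(p) over the observed action types.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


# A trajectory that repeats one action carries no diversity.
repetitive = [[{"action": "click"}] * 4]

# Four equally frequent action types give the maximum for four symbols.
varied = [[
    {"action": "click"},
    {"action": "type"},
    {"action": "scroll"},
    {"action": "swipe"},
]]

low = action_entropy(repetitive)
high = action_entropy(varied)
```

Under this reading, a dataset dominated by one action type scores near zero, while a dataset that mixes action types evenly scores higher. Other definitions (for example, entropy over screen transitions) are equally plausible.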

Comparison of GUI-ReWalk and Other GUI Datasets

| Dataset | Environment | Annotation | DOM/AxTree | Thoughts | Tasks | Avg. Steps |
|---|---|---|---|---|---|---|
| AndroidControl | Mobile | Human | ✔ | Short | 15283 | 5.5 |
| AMEX | Mobile | Human | ✘ | ✘ | 2991 | 11.9 |
| AitW | Mobile | Human | ✔ | ✘ | 2346 | 8.1 |
| AitZ | Mobile | Human | ✘ | Short | 1987 | 6.0 |
| GUI-Odyssey | Mobile | Human | ✘ | ✘ | 7735 | 15.3 |
| OS-Genesis | Mobile & Web | Model | ✔ | Short | 2451 | 6.4 |
| WonderBread | Web | Human | ✔ | ✘ | 598 | 8.4 |
| AgentTrek | Web | Model | ✔ | Short | 10398 | 12.1 |
| Mind2Web | Web | Human | ✔ | ✘ | 2350 | 7.3 |
| GUIAct | Web | Human | ✔ | ✘ | 2482 | 6.7 |
| AgentNet | Desktop | Human | ✔ | Long | 22625 | 18.6 |
| GUI-ReWalk (Ours) | Mobile & Desktop | Model | ✔ | Long | 50k+ | 22.5 |

Experimental Results

▶ Grounding Capability

Results on the ScreenSpot-Pro benchmark.

| Model | CAD Text | CAD Icon | DEV Text | DEV Icon | Creative Text | Creative Icon | Scientific Text | Scientific Icon | Office Text | Office Icon | OS Text | OS Icon | Avg Text | Avg Icon | Avg Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-4o | 2.0 | 0.0 | 1.3 | 0.0 | 1.0 | 0.0 | 2.1 | 0.0 | 1.1 | 0.0 | 0.0 | 0.0 | 1.3 | 0.0 | 0.8 |
| SeeClick-9.6B | 2.5 | 0.0 | 0.6 | 0.0 | 1.0 | 0.0 | 3.5 | 0.0 | 1.1 | 0.0 | 2.8 | 0.0 | 1.8 | 0.0 | 1.1 |
| OS-Atlas-7B | 12.2 | 4.7 | 33.1 | 1.4 | 28.8 | 2.8 | 37.5 | 7.3 | 33.9 | 5.7 | 27.1 | 4.5 | 28.1 | 4.0 | 18.9 |
| UGround-7B | 14.2 | 1.6 | 26.6 | 2.1 | 27.3 | 2.8 | 31.9 | 2.7 | 31.6 | 11.3 | 17.8 | 0.0 | 25.0 | 2.8 | 16.5 |
| UI-TARS-1.5-7B | 49.2 | 17.2 | 56.5 | 15.9 | 60.1 | 14.7 | 74.3 | 24.5 | 81.4 | 43.4 | 55.1 | 18.0 | 62.7 | 20.0 | 46.4 |
| Qwen2.5-VL-7B | 17.2 | 3.1 | 35.1 | 2.1 | 23.2 | 6.3 | 36.1 | 6.4 | 41.8 | 11.3 | 28.0 | 13.5 | 29.7 | 6.5 | 20.8 |
| GUI-ReWalk-7B (ours) | 35.0 | 17.9 | 46.8 | 11.0 | 40.9 | 9.8 | 60.4 | 28.2 | 56.5 | 28.3 | 39.2 | 19.1 | 46.2 | 17.2 | 35.1 |
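
For readers unfamiliar with how grounding scores of this kind are typically obtained, the sketch below assumes the point-in-box criterion common to ScreenSpot-style benchmarks: a prediction counts as correct when the predicted click point falls inside the target element's bounding box. This page does not specify the scoring rule, so the function names, the box layout `(left, top, right, bottom)`, and the sample data are illustrative assumptions rather than the official evaluation script.

```python
def is_hit(point, bbox):
    """Return True when the (x, y) point lies inside the bounding box.

    Assumption: bbox is (left, top, right, bottom) in the same pixel
    coordinate system as the point, with edges counted as inside.
    """
    x, y = point
    left, top, right, bottom = bbox
    return left <= x <= right and top <= y <= bottom


def grounding_accuracy(predictions, targets):
    """Percentage of predicted points that land inside their target box."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        return 0.0
    hits = sum(is_hit(p, b) for p, b in zip(predictions, targets))
    return 100.0 * hits / len(targets)


# Hypothetical predictions and target boxes, for illustration only.
predicted_points = [(10, 10), (50, 50), (200, 5)]
target_boxes = [(0, 0, 20, 20), (60, 60, 80, 80), (150, 0, 250, 10)]

accuracy = grounding_accuracy(predicted_points, target_boxes)
```

In this toy case the first and third points fall inside their boxes and the second misses, so two of three predictions count as hits. Icon targets are usually much smaller than text targets, which is one reason a criterion like this tends to yield lower icon scores.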

Results on the OSWorld-G benchmark.

| Model | Text Matching | Element Recognition | Layout Understanding | Fine-grained Manipulation | Refusal | Avg |
|---|---|---|---|---|---|---|
| UGround-7B | 51.3 | 40.3 | 43.5 | 24.8 | - | 36.4 |
| UI-TARS-1.5-7B | 59.8 | 43.0 | 50.6 | 37.6 | - | 47.5 |
| Qwen2.5-VL-7B | 23.0 | 15.5 | 19.0 | 11.4 | - | 16.8 |
| GUI-ReWalk-7B (ours) | 35.2 | 30.0 | 31.2 | 16.1 | - | 27.5 |

▶ Navigation Capability

Results on AndroidControl and GUI-Odyssey benchmarks.

| Model | AndroidControl-Low Type Acc. | AndroidControl-Low SR | AndroidControl-High Type Acc. | AndroidControl-High SR | GUI-Odyssey Type Acc. | GUI-Odyssey SR |
|---|---|---|---|---|---|---|
| GPT-4o | 74.3 | 19.4 | 66.3 | 20.8 | 34.3 | 3.3 |
| SeeClick-9.6B | 93.0 | 75.0 | 82.9 | 59.1 | 71.0 | 53.9 |
| OS-Atlas-7B | 93.6 | 85.2 | 85.2 | 71.2 | 84.5 | 62.0 |
| OS-Genesis-7B | 90.7 | 74.2 | 66.2 | 44.5 | -- | -- |
| Qwen2.5-VL-7B | 91.8 | 85.0 | 70.9 | 69.8 | 59.5 | 46.3 |
| GUI-ReWalk-7B (ours) | 91.7 | 96.3 | 73.1 | 66.2 | 69.6 | 64.2 |

Notes:

- Type Acc.: Type Accuracy
- SR: Step Success Rate
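
The two metrics above can be illustrated with a minimal sketch. It assumes a common convention: type accuracy counts steps whose predicted action type matches the reference, and step success rate counts steps where the type and all arguments match. The exact matching rules used for these benchmarks are not stated on this page, and real evaluations often allow tolerance on click coordinates or text. The dict schema and sample steps below are hypothetical.

```python
def step_metrics(predicted, reference):
    """Return (type_accuracy, step_success_rate) as percentages.

    Assumptions: each step is a dict with a "type" key plus arguments,
    and a step succeeds only on exact equality with the reference.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        return 0.0, 0.0
    pairs = list(zip(predicted, reference))
    type_hits = sum(p["type"] == r["type"] for p, r in pairs)
    step_hits = sum(p == r for p, r in pairs)
    n = len(pairs)
    return 100.0 * type_hits / n, 100.0 * step_hits / n


# Hypothetical four-step episode, for illustration only.
reference = [
    {"type": "click", "target": "Settings"},
    {"type": "type", "text": "wifi"},
    {"type": "scroll", "direction": "down"},
    {"type": "click", "target": "Wi-Fi"},
]
predicted = [
    {"type": "click", "target": "Settings"},  # type and arguments match
    {"type": "type", "text": "wi-fi"},        # right type, wrong text
    {"type": "swipe", "direction": "down"},   # wrong type
    {"type": "click", "target": "Wi-Fi"},     # type and arguments match
]

type_acc, step_sr = step_metrics(predicted, reference)
```

Here three of four steps have the right action type and two of four match completely. Benchmark-specific scripts may define a successful step differently, so this sketch should not be used to reproduce the reported numbers.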

BibTeX

@misc{lin2025guirewalkmassivedatageneration,

title={GUI-ReWalk: Massive Data Generation for GUI Agent via Stochastic Exploration and Intent-Aware Reasoning},

author={Musen Lin and Minghao Liu and Taoran Lu and Lichen Yuan and Yiwei Liu and Haonan Xu and Yu Miao and Yuhao Chao and Zhaojian Li},

year={2025},

eprint={2509.15738},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2509.15738},

}